Introduction

AI assistants are quickly becoming the next wave of software interfaces. Users can explain what they need in their own words, and the assistant can draw on your system's data and capabilities to deliver results. To do that reliably, the assistant needs a well-defined way to reach those capabilities. That's where the Model Context Protocol (MCP) and GraphQL come in.

In this course, you'll learn how to connect your AI assistant to your backend data securely and in a way that's ready for enterprise needs, using the Apollo MCP Server and GraphOS. You'll see how a production-ready graph that uses persisted queries, introspection, and contracts gives your assistant the structure and guardrails it needs to be helpful without going rogue.

If you're new to MCP, we recommend starting with the course "Getting started with MCP and GraphQL", where we introduce the protocol and explore its use in a development environment.

Check your plan: This course includes features that are only available on the following GraphOS plans: Developer, Standard, or Enterprise.

Recap on MCP ⚡

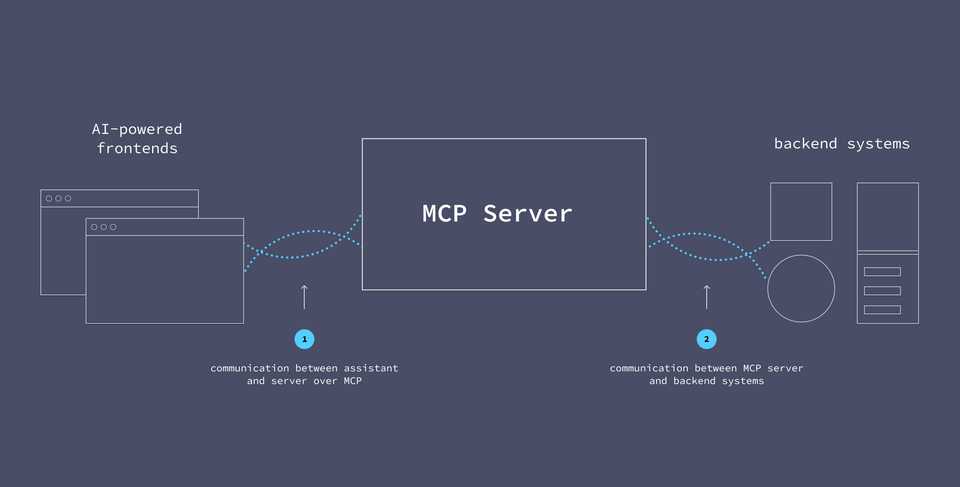

The Model Context Protocol is an emerging standard that defines how AI assistants and services communicate. An MCP server lets us build a bridge between our backend systems and the LLMs responsible for an increasing number of customer-facing interactions.
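Concretely, MCP messages are JSON-RPC 2.0 objects. As a minimal sketch, assuming the `tools/call` method and `params` shape described in the MCP specification, this is roughly the message a client sends to ask an MCP server to run one of its actions (its tools, described below). The tool name `GetListing` and its `id` argument are hypothetical.

```python
import json
from itertools import count

# JSON-RPC 2.0 matches each response to its request by "id",
# so every request gets a fresh one.
_request_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
print(tools_call_request("GetListing", {"id": "listing-1"}))
```

The server's reply carries the same `id`, with the tool's output in its `result` field.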

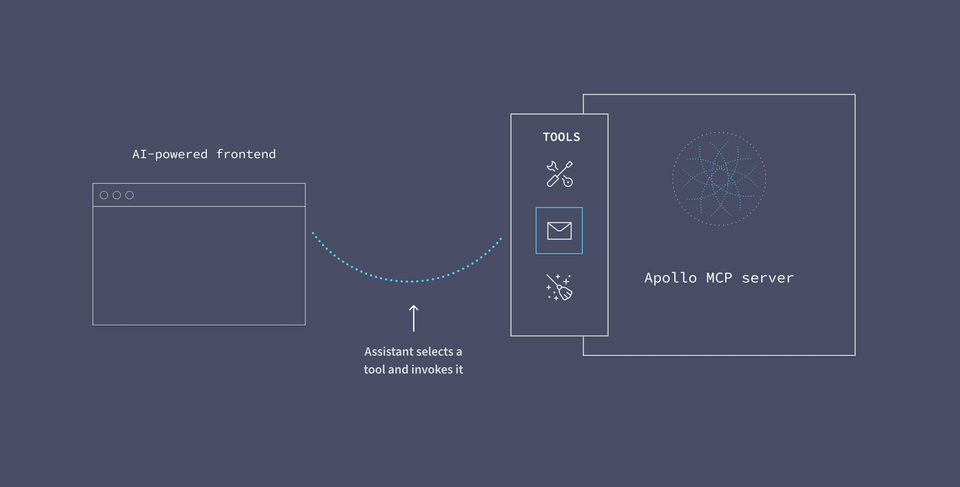

But the MCP server isn't just an extra stop along the way to data: it's where we can define the various actions we want AI assistants to have access to. We package these actions as tools. Each tool comes with a name and a description, along with any inputs the assistant would need to provide when "calling it". When a tool is called, the MCP server runs the actual logic packaged up behind it. For us, this usually means the MCP server sends a GraphQL operation to our API to be resolved. When it receives the response, the server hands it over to the LLM to continue the conversation with the user.
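To make that flow concrete, here's a minimal sketch of a tool as a server might model it: a name, a description, the inputs the assistant must supply, and the GraphQL operation to send when the tool is called. The `Tool` class, the `GetListing` operation, and the injected `execute` function (standing in for an HTTP request to your GraphQL API) are all hypothetical; this is not how the Apollo MCP Server is implemented internally.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str                         # shown to the assistant
    description: str                  # shown to the assistant
    required_inputs: tuple[str, ...]  # arguments the assistant must supply
    operation: str                    # GraphQL document the server sends


# Whatever actually sends the request to the GraphQL API (e.g. an HTTP POST).
# Injected as a parameter so this sketch runs without a network connection.
Executor = Callable[[dict], dict]


def call_tool(tool: Tool, arguments: dict, execute: Executor) -> dict:
    """Run a tool's GraphQL operation and package the response for the LLM."""
    missing = [name for name in tool.required_inputs if name not in arguments]
    if missing:
        raise ValueError(f"{tool.name} is missing inputs: {missing}")

    # A standard GraphQL-over-HTTP request body: the operation plus its variables.
    response = execute({"query": tool.operation, "variables": arguments})

    # MCP tool results are a list of content items; return the GraphQL
    # response as one JSON text item for the LLM to read.
    return {"content": [{"type": "text", "text": json.dumps(response)}]}


# Hypothetical tool and a fake executor standing in for the real API.
get_listing = Tool(
    name="GetListing",
    description="Fetch the title of a listing by its ID.",
    required_inputs=("id",),
    operation="query GetListing($id: ID!) { listing(id: $id) { title } }",
)


def fake_execute(body: dict) -> dict:
    return {"data": {"listing": {"title": "Cozy crater"}}}


print(call_tool(get_listing, {"id": "listing-1"}, fake_execute))
```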

Tools help us restrict the actions an LLM is able to perform on its own. In most cases, we won't want AI assistants poking around our systems for their own answers. Tools give us a healthy balance: pre-approved operations let us control what is permitted to run in our system, while the context of a tool's name and description allows the AI assistant to decide when an operation should run.
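The guardrail comes from what the assistant can and can't see. In this hypothetical sketch, the registry shows the assistant only each tool's name and description (a real MCP tool listing also includes an input schema, omitted here), while the operation text stays on the server. An unknown tool name is rejected rather than executed, so the assistant can only choose from the pre-approved menu.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    operation: str  # stays on the server; never sent to the assistant


class ToolRegistry:
    """Hypothetical registry: only operations registered here can ever run."""

    def __init__(self, tools: list[Tool]) -> None:
        self._tools = {tool.name: tool for tool in tools}

    def list_tools(self) -> list[dict]:
        # What the assistant sees: names and descriptions to choose from.
        return [{"name": t.name, "description": t.description} for t in self._tools.values()]

    def operation_for(self, name: str) -> str:
        # The assistant picks a tool by name; it cannot supply its own GraphQL.
        if name not in self._tools:
            raise KeyError(f"Unknown tool: {name}")
        return self._tools[name].operation


registry = ToolRegistry([
    Tool("GetListing", "Fetch the title of a listing by its ID.",
         "query GetListing($id: ID!) { listing(id: $id) { title } }"),
])
print(registry.list_tools())
```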

MCP and production graphs

How and where to use MCP is an ongoing discussion in the industry, especially around authorization. It's important to consider carefully which mission-critical data we allow AI assistants to access.

While MCP is still evolving as a standard, its potential is clear: we can use AI assistants to automate, process, and interpret data operations to enhance user experiences. As the protocol continues to mature, we can already begin integrating it with other key technologies, such as GraphQL, to unlock its capabilities.

MCP on GraphOS

When we equip our MCP server with a GraphOS-registered graph, we can take advantage of some really powerful features to both enhance and govern how AI assistants interact with system data.

In GraphOS, we can incrementally build our comprehensive API schema: the composed description of all the capabilities across all of our different APIs. It contains all the instructions about the bits of data we can request, and where each piece comes from. When we hand this schema to the GraphOS Router, the router is able to orchestrate requests across all our different domains, serving up responses for complex queries that can span multiple systems.
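As a toy illustration only (the GraphOS Router's real query planner also handles nested fields, entities, and the order of fetches), the core idea is that each field in the composed schema has a subgraph that provides it, so one client query can fan out into one fetch per subgraph. The field and subgraph names below are hypothetical.

```python
from collections import defaultdict

# Hypothetical composed-schema metadata: which subgraph provides each top-level field.
FIELD_OWNERS = {
    "listing": "listings",
    "bookings": "bookings",
    "reviews": "reviews",
}


def plan_fetches(requested_fields: list[str]) -> dict[str, list[str]]:
    """Group the top-level fields of one client query by the subgraph that provides them."""
    plan: dict[str, list[str]] = defaultdict(list)
    for field_name in requested_fields:
        owner = FIELD_OWNERS.get(field_name)
        if owner is None:
            raise ValueError(f"No subgraph provides field: {field_name}")
        plan[owner].append(field_name)
    return dict(plan)


# One query that asks for both `listing` and `reviews` becomes two subgraph fetches.
print(plan_fetches(["listing", "reviews"]))
```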

Note: If you're new to federated graphs and GraphOS, check out the course "Voyage I: Federation from Day One".

Three of these features are central to this course: persisted queries, schema introspection, and contracts.

Persisted queries

Persisted queries are GraphQL operations that we define and register in advance for clients to use. Pre-defining these queries gives us fine-grained control over exactly what data can be requested, while still leaving assistants enough flexibility to answer users' questions. When we bundle our persisted queries as MCP tools, our AI assistants can treat them like options on an operation menu.
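Conceptually, a persisted query list acts as a safelist: clients refer to an operation by ID, and anything not on the list is refused. Here's a minimal sketch, assuming operation IDs are SHA-256 hashes of the operation text; `PersistedQueryList` and the sample operation are hypothetical, and in GraphOS this enforcement can be handled by the router rather than by code you write.

```python
import hashlib


def operation_id(body: str) -> str:
    # Assumption: an operation's ID is the SHA-256 hash of its text.
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class PersistedQueryList:
    """Hypothetical safelist: only operations registered in advance can run."""

    def __init__(self, operations: list[str]) -> None:
        self._by_id = {operation_id(body): body for body in operations}

    def lookup(self, op_id: str) -> str:
        # Clients send an ID instead of GraphQL text; unknown IDs are refused.
        if op_id not in self._by_id:
            raise PermissionError(f"Operation {op_id} is not in the persisted query list")
        return self._by_id[op_id]


GET_LISTING = "query GetListing($id: ID!) { listing(id: $id) { title } }"
pql = PersistedQueryList([GET_LISTING])

# Registered: returns the operation text.
print(pql.lookup(operation_id(GET_LISTING)))

# Not registered: refused, even though it is valid GraphQL.
try:
    pql.lookup(operation_id("query { __schema { types { name } } }"))
except PermissionError as err:
    print(err)
```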

Introspection and contracts

Schema introspection lets an assistant explore the schema's types and fields and create its own operations as needed. It's powerful, but it can also expose parts of the graph we never intended an assistant to reach. To limit what introspection can reveal, we can use contracts: subsets of the schema that restrict which types and fields can be viewed and acted upon. We'll equip our assistant with introspection superpowers, kept in check by a contract in GraphOS.
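GraphOS contracts are defined by filtering schema elements on their `@tag` directives. As a toy model of the idea, not the GraphOS implementation, assume each field carries a set of tags, the contract drops any field with an excluded tag, and introspection only ever sees what remains. The types, fields, and `internal` tag are hypothetical.

```python
# Hypothetical schema: type name -> field name -> tags applied to that field.
SCHEMA: dict[str, dict[str, set[str]]] = {
    "Listing": {"id": set(), "title": set(), "hostEmail": {"internal"}},
    "Booking": {"id": set(), "paymentDetails": {"internal"}},
}


def apply_contract(
    schema: dict[str, dict[str, set[str]]], excluded_tags: set[str]
) -> dict[str, list[str]]:
    """Keep only fields that carry none of the excluded tags."""
    return {
        type_name: [name for name, tags in fields.items() if not (tags & excluded_tags)]
        for type_name, fields in schema.items()
    }


def introspect(contract_schema: dict[str, list[str]], type_name: str) -> list[str]:
    # Introspection runs against the contract, so filtered fields are invisible.
    return contract_schema.get(type_name, [])


public_schema = apply_contract(SCHEMA, {"internal"})
print(introspect(public_schema, "Listing"))
```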

Key takeaways

- The Model Context Protocol (MCP) is an emerging standard that defines how AI assistants and services communicate.

- GraphOS features secure and refine MCP capabilities through persisted queries, schema introspection, and contracts.

- Persisted queries are GraphQL operations we define and register in advance for clients to use.

- Introspection allows an assistant to devise its own queries after reading the available types and fields in a schema. Contracts allow us to limit assistants to a subset of the schema, which gives us greater control over what kinds of queries they can devise.

Up next

Let's set up our graph and tools in the next lesson!
