Introduction

Integrations with AI are everywhere these days. The power of Large Language Models (LLMs) to interact with users, handle their questions, and solve problems has transformed the roadmap for nearly every app out there. But if we charge ahead without considering how AI assistants should get access to our backend data—not to mention, which data—we'll find ourselves in a sticky situation.

Even as we strive to adopt new technologies as quickly as possible, we can't ignore the security of our systems and data. So, moving quickly while staying secure is what this course is all about! In the lessons ahead, we'll get familiar with the Model Context Protocol (MCP), the new standard protocol for enabling communication between LLMs and backend services. And throughout this series, we'll explore how using GraphQL and the Apollo ecosystem can help us secure our development along the way.

We're going to bring all the pieces together, from APIs and AI assistants, to the MCP servers that make it all possible. So let's jump in!

What is MCP?

The Model Context Protocol is an emerging standard for enabling communication between AI assistants and services. MCP defines the rules, expectations, and formatting these components should follow when communicating with each other. Like HTTP for web pages, or DNS for domain names, MCP defines how communication occurs between assistants and services, letting us developers focus on what we're communicating.

To make the most of what AI assistants can offer, we need them to have access to data and capabilities. But we don't want to build a bespoke integration for every assistant and every service. This is where MCP steps in to bridge the gap: with an MCP server, we can define a declarative interface of all the actions and functionality an AI assistant is permitted to perform.

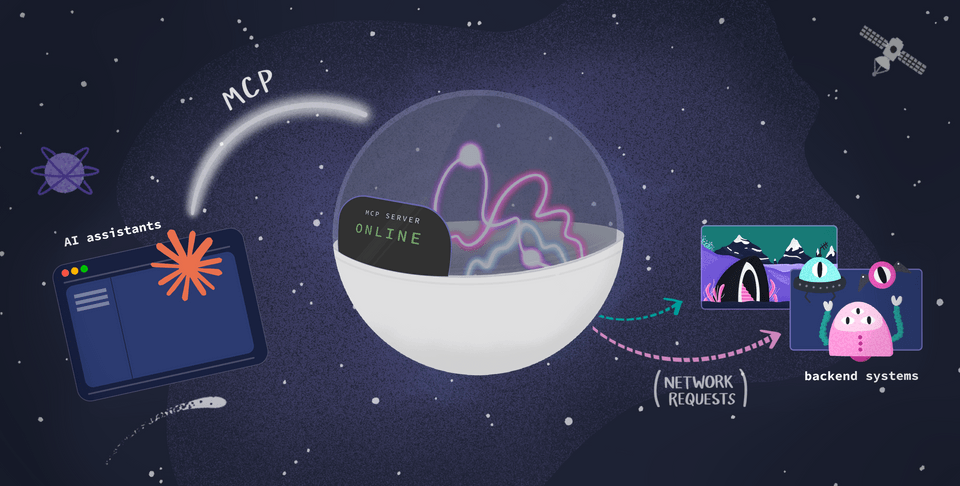

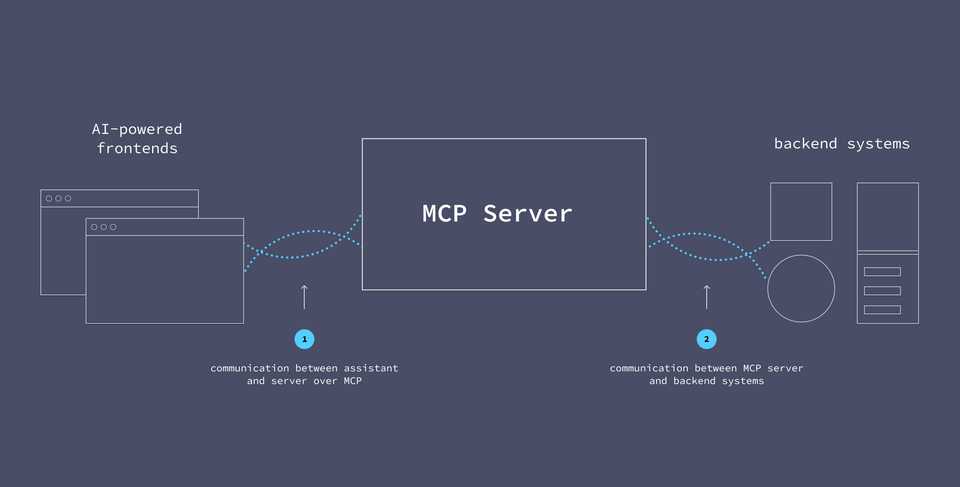

An MCP server is exactly what it sounds like: a server that uses the Model Context Protocol. Specifically, it uses this protocol to communicate with AI assistants. But, like a regular web-based server, it also has the ability to send requests across the network to backend services.

Sticking an MCP server between AI assistants and backend services lets us define the precise points of access an assistant has into a system. We do this by equipping the server with different actions an AI assistant is able to access and perform. When an assistant "calls" one of these actions, the MCP server runs or invokes the actual logic associated with it: an operation that we developers have defined ahead of time.

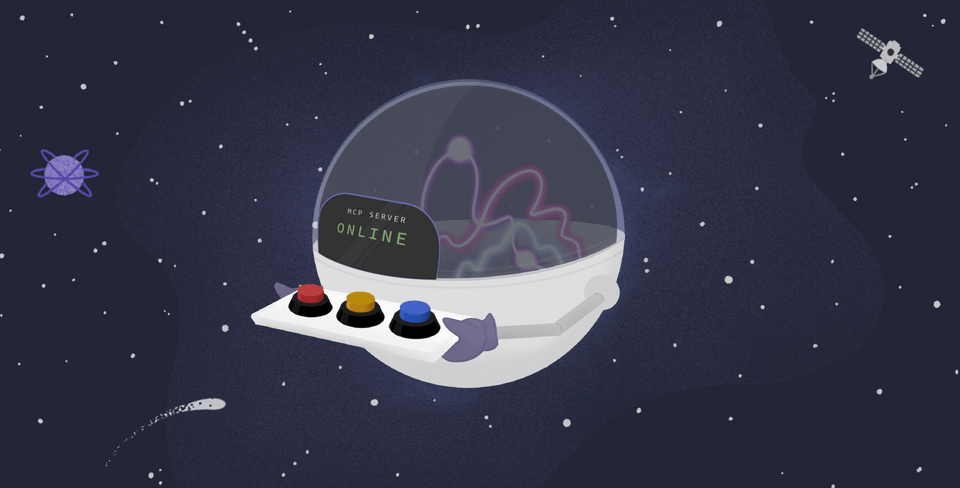

Because we've defined them in advance, these operations guide the assistant on what to do given a particular user prompt. So instead of poking around based only on an LLM's training data, an assistant can resolve user requests by browsing a selection of pre-approved actions. We refer to these different actions as tools.
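To make the idea of pre-approved actions a little more concrete, here's a minimal TypeScript sketch of a registry that maps tool names to operations defined ahead of time. The names `ToolHandler`, `toolRegistry`, and `runTool`, along with both example tools, are hypothetical and exist only for illustration; a real MCP server would handle this through its own SDK or configuration.

```typescript
// Hypothetical sketch: pre-approved operations, keyed by tool name.
type ToolHandler = (args: Record<string, unknown>) => string;

const toolRegistry: Record<string, ToolHandler> = {
  // Each entry is logic that developers defined ahead of time.
  getLaunchCount: () => "3 launches scheduled",
  echoMessage: (args) => `You said: ${String(args["message"])}`,
};

// The assistant supplies only a tool name and arguments.
// Anything that is not in the registry is rejected.
function runTool(toolName: string, args: Record<string, unknown>): string {
  // Own-property check, so inherited keys like "toString" are not treated as tools.
  const handler = Object.prototype.hasOwnProperty.call(toolRegistry, toolName)
    ? toolRegistry[toolName]
    : undefined;
  if (handler === undefined) {
    throw new Error(`Unknown tool: ${toolName}`);
  }
  return handler(args);
}

console.log(runTool("echoMessage", { message: "hello" }));
```

The point of the sketch is the boundary: the assistant never supplies logic of its own, only the name of an action we've already approved.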

What are tools?

Think of tools as a collection of options the AI assistant can select from. You can picture these like options on a dashboard: the assistant is given some buttons to push, a few levers to pull, maybe even a couple of inputs. Each tool represents some action that can be performed within the system—for example, requests to read or update data.

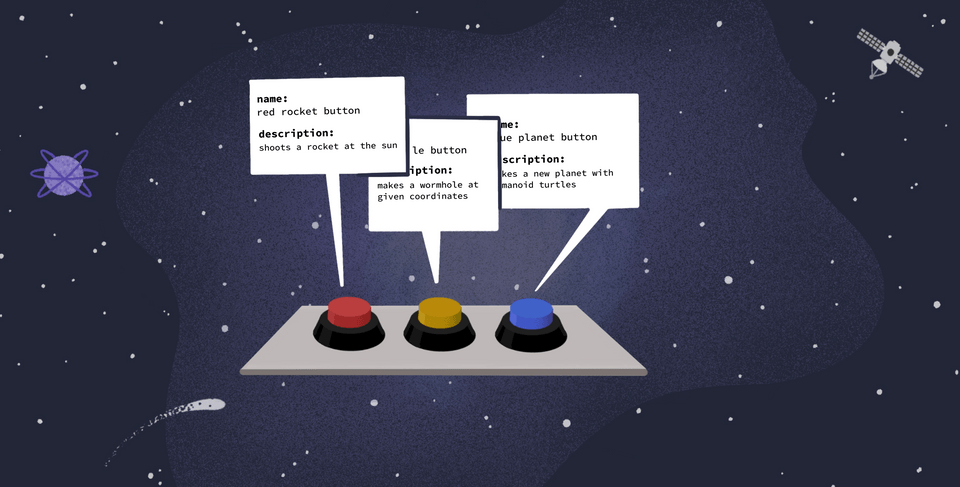

A tool's description helps the assistant understand its purpose. When powered by an LLM like Claude, the assistant can use its broad understanding of language and semantics to determine which tool is most appropriate when responding to a user's question.

But when it comes to what actually happens behind the scenes when a button is pushed, the assistant is no expert! Its job is to translate the user request and map it to the appropriate tool to call. It simply "calls" the tool in the MCP server and waits for the response, then uses the data it receives to respond to the user's prompt.
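Under the hood, MCP messages follow JSON-RPC 2.0, and a tool call is a request with the method `tools/call`. The sketch below shows roughly what that exchange can look like. The tool name `getUpcomingLaunches`, its `limit` argument, and the result text are hypothetical, and the types are simplified compared with the full specification.

```typescript
// Simplified shape of an MCP tool call request (JSON-RPC 2.0).
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Simplified shape of the result: a list of content items.
interface ToolCallResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

// Build the message an assistant's MCP client would send to call a tool.
function buildToolCall(
  id: number,
  toolName: string,
  args: Record<string, unknown>
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// Collect the text the assistant would use when answering the user.
function readText(result: ToolCallResult): string {
  return result.content.map((item) => item.text).join("\n");
}

// Hypothetical tool name, argument, and response text.
const callRequest = buildToolCall(1, "getUpcomingLaunches", { limit: 2 });
const sampleResult: ToolCallResult = {
  content: [{ type: "text", text: "Next launch: Moon shuttle" }],
  isError: false,
};

console.log(JSON.stringify(callRequest));
console.log(readText(sampleResult));
```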

Tools are defined as JSON objects in a configuration file within the MCP server. Basic tools will specify at least a name, a description, and an inputSchema. The inputSchema defines the JSON schema for any required inputs the assistant needs to provide when calling the tool.

{
  tools: [
    {
      name: "red rocket button",
      description: "shoots a rocket at the sun",
      inputSchema: { ... }
    }
  ]
}
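As an illustration of what might go inside an inputSchema, here's a sketch of a hypothetical tool whose only required input is a launch ID. The tool name, description, and `launchId` property are invented for this example. The `missingInputs` helper is also hypothetical, and it checks only for required keys rather than performing full JSON Schema validation.

```typescript
// Simplified tool shape: a name, a description, and a JSON Schema for inputs.
interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

// Hypothetical tool, invented for illustration.
const getLaunchDetails: ToolDefinition = {
  name: "getLaunchDetails",
  description: "Fetches the destination and date for a single launch",
  inputSchema: {
    type: "object",
    properties: {
      launchId: { type: "string", description: "The ID of the launch to look up" },
    },
    required: ["launchId"],
  },
};

// Lists the required inputs that a call's arguments leave out.
function missingInputs(tool: ToolDefinition, args: Record<string, unknown>): string[] {
  return (tool.inputSchema.required ?? []).filter((key) => !(key in args));
}

console.log(missingInputs(getLaunchDetails, {}));
console.log(missingInputs(getLaunchDetails, { launchId: "42" }));
```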

Additionally, tools can define optional annotations. These give the LLM hints about the tool's behavior, which can provide just a bit more information about what happens behind the scenes when the tool is used.
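For reference, the MCP specification describes annotation fields such as `readOnlyHint`, `destructiveHint`, `idempotentHint`, and `openWorldHint`. The sketch below attaches a few of them to two hypothetical tools. The `needsConfirmation` helper is a made-up example of how a client might act on the hints; they are advisory only, not a security guarantee.

```typescript
// Annotation fields described in the MCP specification; all are optional hints.
interface ToolAnnotations {
  title?: string;
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
  idempotentHint?: boolean;
  openWorldHint?: boolean;
}

interface AnnotatedTool {
  name: string;
  description: string;
  annotations?: ToolAnnotations;
}

// Hypothetical tools, invented for illustration.
const listLaunches: AnnotatedTool = {
  name: "listLaunches",
  description: "Lists upcoming launches",
  annotations: { readOnlyHint: true },
};

const cancelBooking: AnnotatedTool = {
  name: "cancelBooking",
  description: "Cancels a traveler's booking",
  annotations: { readOnlyHint: false, destructiveHint: true },
};

// Hypothetical client-side policy: ask the user before running
// any tool that is not explicitly marked as read-only.
function needsConfirmation(tool: AnnotatedTool): boolean {
  return tool.annotations?.readOnlyHint !== true;
}

console.log(needsConfirmation(listLaunches));
console.log(needsConfirmation(cancelBooking));
```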

Note: We won't be specifying annotations in this course, but if you're interested, check out the official MCP documentation for reasons why you might consider them in the future.

We'll spend some time defining our own tools, though our MCP server of choice will make the process much easier—so stay tuned!

The bridge to data

When running an MCP server, there are two connection points that we need to configure. The AI assistant needs to be able to talk to the MCP server; and the MCP server needs to talk to our backend services or APIs. In this way, the MCP server acts as a bridge between the two.

In the lessons ahead, we'll configure both of these connections. We'll first connect our MCP server to a GraphQL API (where our data lives) and design our first operation, which will become our assistant's first tool. Then we'll hook up the AI assistant to the MCP server. We can then ask the assistant a question that prompts it to reach into its MCP toolbox and find the right tool for the job.

Introducing the Apollo MCP Server

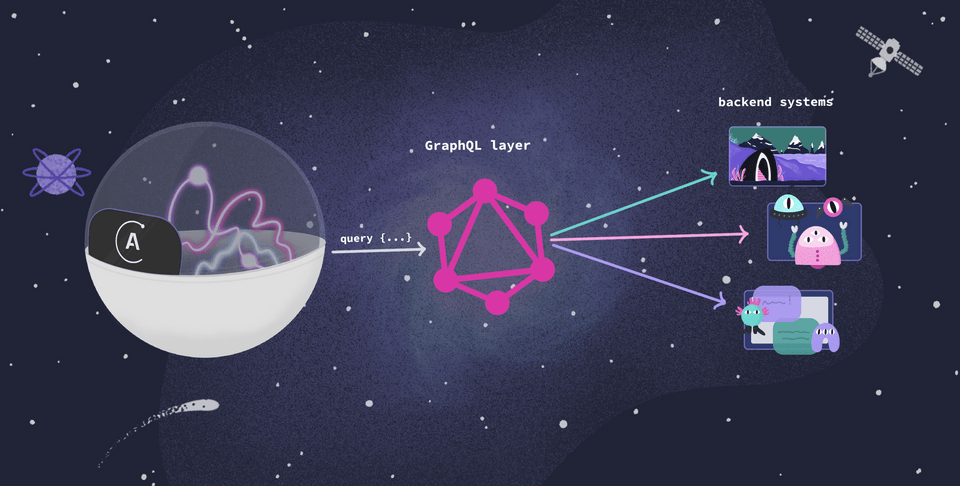

The Apollo MCP server is an MCP server implementation that's built to work with GraphQL right from the start.

With GraphQL, we can write specific yet robust queries that request data from multiple services all at once. In fact, GraphQL does the heavy lifting of API orchestration for connecting your API to AI systems: it helps ensure policies are enforced, APIs are called in the right sequence, only the appropriate data is used and returned, and more! In the Apollo MCP server, we use these queries as the operations behind each tool. The actual implementation details of the backend stay hidden from an assistant's prying eyes; instead, the server uses the self-documenting API schema to tell the assistant everything it needs to know about each tool's purpose.
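As a rough illustration of a query serving as the operation behind a tool, the sketch below pairs a hypothetical GraphQL operation with the JSON body a server could POST to a GraphQL endpoint. The `GetLaunch` operation, its fields, and the helpers `operationNameOf` and `buildGraphQLRequestBody` are assumptions made for this example. They are not part of the Apollo MCP server's API.

```typescript
// Hypothetical operation; the schema fields are invented for illustration.
const GET_LAUNCH_OPERATION = `
  query GetLaunch($id: ID!) {
    launch(id: $id) {
      destination
      departureDate
    }
  }
`;

interface GraphQLRequestBody {
  query: string;
  operationName: string;
  variables: Record<string, unknown>;
}

// Pull the operation name out of the document, so a tool could be named after it.
function operationNameOf(query: string): string | null {
  const match = /\b(?:query|mutation)\s+([A-Za-z_][A-Za-z0-9_]*)/.exec(query);
  return match?.[1] ?? null;
}

// Build the JSON body for a GraphQL-over-HTTP request.
// The assistant's tool inputs become the operation's variables.
function buildGraphQLRequestBody(
  query: string,
  variables: Record<string, unknown>
): GraphQLRequestBody {
  const operationName = operationNameOf(query);
  if (operationName === null) {
    throw new Error("The operation needs a name");
  }
  return { query, operationName, variables };
}

console.log(JSON.stringify(buildGraphQLRequestBody(GET_LAUNCH_OPERATION, { id: "42" })));
```

Notice that the assistant only ever supplies the variables. The shape of the query, and therefore which data can come back, is fixed by the developer.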

We'll get the MCP server, along with our graph and AI assistant, set up in the next lesson.

Learner prerequisites

Before you get started, here are the prerequisite technologies and tools you should be familiar with.

- Basic knowledge of GraphQL, including Schema Definition Language (SDL) and queries

- Basic familiarity with LLMs and AI assistants such as Claude or ChatGPT

- Basic understanding of APIs and client-server communication

- A code editor (we're using VS Code)

- Node (v18 or later)

Need to brush up on your GraphQL? Check out one of our introductory courses to review the basics. We won't focus on GraphQL syntax in this course, but we will run a few queries.

Key takeaways

- The Model Context Protocol allows a server to expose particular tools an AI assistant can invoke to resolve user requests.

- An MCP server acts as the middleman between an AI assistant and backend services or APIs. When the assistant invokes a tool, the MCP server communicates that operation or request to the relevant service or API.

- Each tool comes with a name, description, and inputSchema. These properties provide the assistant with the information it uses to determine when it should invoke a tool, and what inputs are required.

- Tool definitions can also include optional annotations. Annotations provide more detail about a tool's behavior.

- With the Apollo MCP server, we can equip assistants with tools that run specific yet robust GraphQL queries behind the scenes, capable of fetching data from multiple data sources at once.

Up next

It's more fun to see all this in action. In the next lesson, we'll get our project set up, using Claude as our AI assistant to chat about space travel!
