Overview

Let's first get our API running. From there we'll define some operations and make our first tools.

In this lesson, we will:

- Inspect how communication will occur between API, server, and assistant

- Configure the rover dev process

- Launch the API and the server

The MCP server

To facilitate communication between APIs and assistants, the MCP server needs to be able to communicate with both. Let's start by looking at how the MCP server communicates with an AI assistant—specifically, how it exposes various actions the assistant can use.

Server to assistant communication

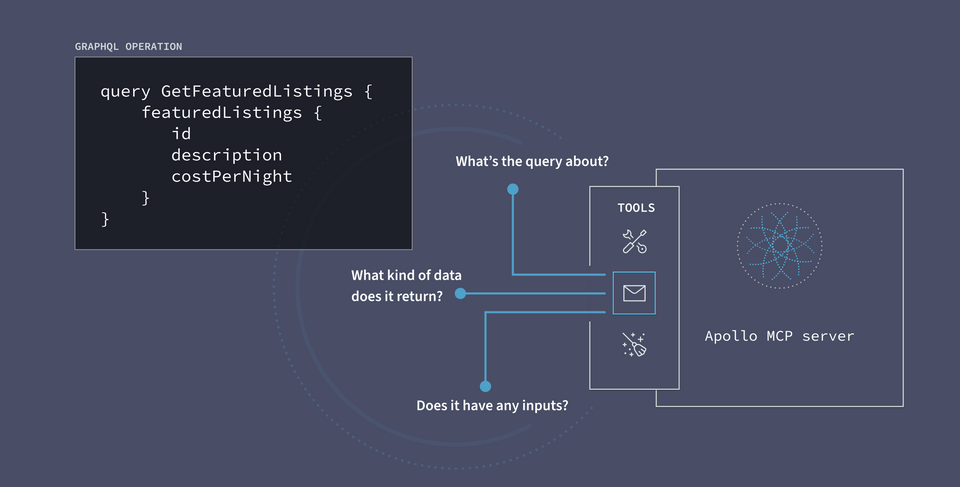

When we start up the MCP server, it will expose a series of tools. Because we'll be fetching our data from a GraphQL API, the logic for each tool will consist of a pre-written GraphQL query.

GraphQL operations (queries and mutations) are declarative, which makes them largely self-documenting. The MCP server can look at each operation, along with the schema it's based on, and put together a summary for each corresponding tool: its name, the gist of what it's used for, the kind of data it returns, and any inputs it requires.
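Here's a rough Python sketch that makes the idea of a tool "summary" more concrete. It turns a single named operation into a tool definition. This is our own simplified illustration, not how Apollo MCP Server actually works. It reads only the operation text with regular expressions and ignores the schema. It handles only scalar, non-list variables, and it takes the description as an argument. The output follows the name / description / inputSchema shape that MCP tool definitions use. The SearchListings operation and its searchListings field are hypothetical.

```python
import re

# Matches the header of a named operation, e.g. "query SearchListings($x: Int!) {".
HEADER = re.compile(r"^\s*(query|mutation)\s+(\w+)\s*(?:\(([^)]*)\))?")
# Matches one scalar variable definition, e.g. "$maxPrice: Float!" (list types are skipped).
VARIABLE = re.compile(r"\$(\w+)\s*:\s*(\w+)(!?)")
# Rough mapping from built-in GraphQL scalars to JSON Schema types.
SCALARS = {"Int": "integer", "Float": "number", "String": "string", "ID": "string", "Boolean": "boolean"}

# Hypothetical operation; the searchListings field is illustrative only.
EXAMPLE_OPERATION = """
query SearchListings($maxPrice: Float!, $limit: Int) {
  searchListings(maxPrice: $maxPrice, limit: $limit) {
    id
    title
  }
}
"""


def summarize(operation: str, description: str) -> dict:
    """Build an MCP-style tool definition from one named GraphQL operation."""
    match = HEADER.match(operation)
    if match is None:
        raise ValueError("expected a named query or mutation")
    _kind, name, var_block = match.groups()
    properties, required = {}, []
    for var, type_name, bang in VARIABLE.findall(var_block or ""):
        # Non-built-in types (e.g. input objects) are crudely treated as objects.
        properties[var] = {"type": SCALARS.get(type_name, "object")}
        if bang:  # a trailing "!" marks a non-null, therefore required, input
            required.append(var)
    return {
        "name": name,
        "description": description,
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


if __name__ == "__main__":
    print(summarize(EXAMPLE_OPERATION, "Find listings under a maximum price"))
```

With the example operation, summarize returns a tool named SearchListings. Its input schema requires maxPrice (a number) and accepts an optional limit (an integer). That is exactly the kind of compact description an assistant can choose from.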

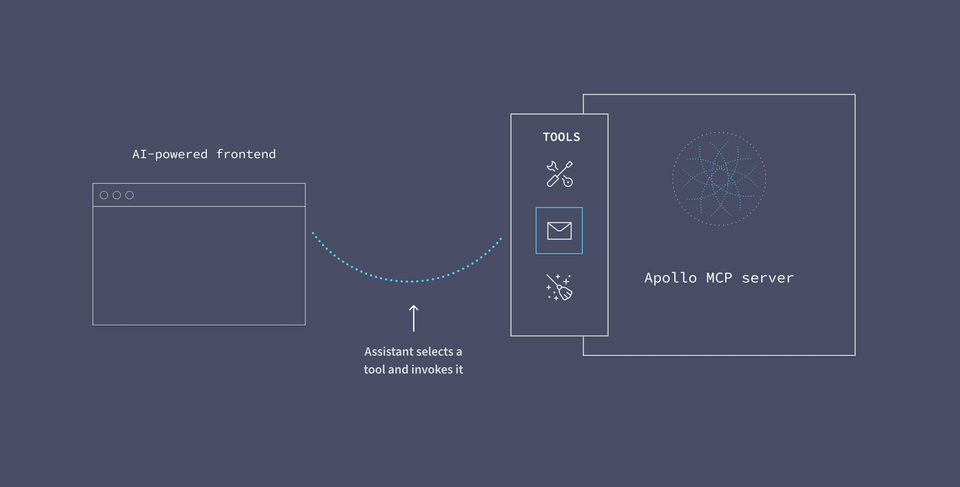

The summary for each tool, rather than the full query itself, can be all that an AI assistant needs to solve a problem. The assistant doesn't need to run the query directly. Instead, it uses its understanding of each tool's purpose to pick the right one for the job.

From there, the MCP server handles the actual execution of the logic we've packaged up for that tool. In this case, it uses its other line of communication to run a GraphQL query against our API!

Each tool we expose from the MCP server can open up all kinds of new possibilities in our user-assistant interactions. But by pre-defining the queries that are executed against our API, we lock down the possible avenues to data to just those that we've permitted.

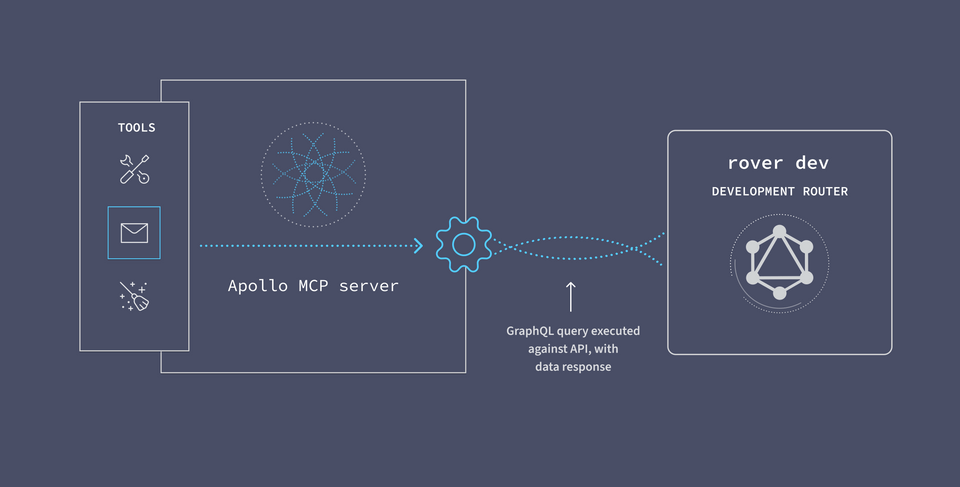
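The lock-down described above can be sketched as an allow-list. The assistant names a tool and supplies variables, but the query text always comes from the server's own registry, and any other tool name is refused. This is a minimal, hypothetical Python illustration: ALLOWED_OPERATIONS, handle_tool_call, and the SearchListings query are made-up names, not part of Apollo MCP Server.

```python
import json

# Hypothetical allow-list: the only operations this server will ever run.
ALLOWED_OPERATIONS = {
    "SearchListings": (
        "query SearchListings($maxPrice: Float!) "
        "{ searchListings(maxPrice: $maxPrice) { id title } }"
    ),
}


def handle_tool_call(tool_name: str, arguments: dict) -> bytes:
    """Turn an assistant's tool call into a GraphQL request body for a pre-defined operation."""
    query = ALLOWED_OPERATIONS.get(tool_name)
    if query is None:
        # The assistant can only choose among registered tools; anything else is refused.
        raise PermissionError(f"unknown tool: {tool_name}")
    # The assistant supplies variables only, never the query text itself.
    payload = {"query": query, "operationName": tool_name, "variables": arguments}
    return json.dumps(payload).encode("utf-8")
```

Because the query string never comes from the assistant, the set of reachable data is fixed by whoever writes ALLOWED_OPERATIONS.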

Server to API communication

On the other side of the equation, we need our MCP server to be able to communicate with our listings API. We can execute queries against the API by sending them to the local router.

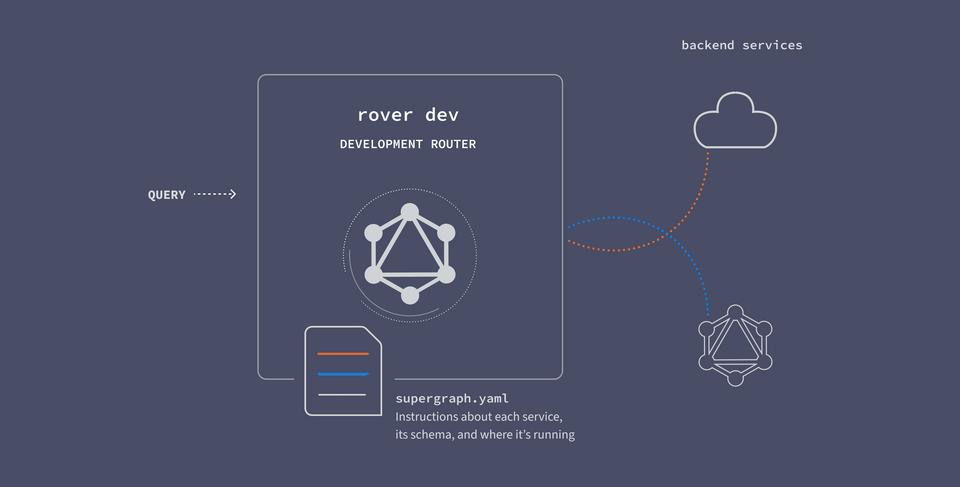

The router works by receiving GraphQL queries, breaking them up across the responsible backend services, and returning the response. When we boot up a local router for development using rover dev, it works the same way: it uses the supergraph.yaml file to understand where it should route each piece of a query.
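The next sketch shows the role of supergraph.yaml in routing. It is a deliberately toy model in Python, not how the router's query planner actually works. The parsed config mirrors the subgraph entries the file contains, each with a routing_url and a schema location. The subgraph names, ports, schema paths, and the FIELD_OWNERS map are all hypothetical. The real router works out field ownership from the composed subgraph schemas rather than from a hand-written table.

```python
from collections import defaultdict

# Hypothetical parsed contents of supergraph.yaml (names, ports, and paths are made up).
SUPERGRAPH_CONFIG = {
    "subgraphs": {
        "listings": {"routing_url": "http://localhost:4001", "schema": {"file": "./listings.graphql"}},
        "accounts": {"routing_url": "http://localhost:4002", "schema": {"file": "./accounts.graphql"}},
    }
}

# Hypothetical: which subgraph resolves each top-level field.
FIELD_OWNERS = {"featuredListings": "listings", "me": "accounts"}


def plan(fields: list[str]) -> dict[str, list[str]]:
    """Group requested top-level fields by the routing_url of the subgraph that owns them."""
    subgraphs = SUPERGRAPH_CONFIG["subgraphs"]
    fetches: dict[str, list[str]] = defaultdict(list)
    for field_name in fields:
        owner = FIELD_OWNERS[field_name]
        fetches[subgraphs[owner]["routing_url"]].append(field_name)
    return dict(fetches)
```

For example, plan(["featuredListings", "me"]) splits the request into one fetch per service URL. That captures the "break it up, then route each piece" idea in miniature.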

We can establish a connection between our router and the MCP server by starting them up at the same time, using rover dev. These are still two separate processes, but we can start them up at once to streamline our development process.

So with both pieces running together, our communication channel is created automatically!

The Rover CLI: from API to MCP

We can use Rover to start up both processes (API and MCP server) at once. By passing a relevant flag (--mcp, to be exact), we can tell Rover that we want to start up an MCP server alongside our API, with the two automatically configured to communicate.

To do this, we'll be using the rover dev command, which will enable us to do two things:

- Start up a local router process, which will receive and resolve GraphQL requests

- Launch the MCP server, which will facilitate communication between AI assistants and the local router (our API and backend services)

Let's do it!

rover dev

Let's look at the rover dev command that will make this magic happen.

We'll specify the path to the supergraph.yaml file so that our router knows where to find our backend services. We'll also add the --mcp flag to boot up the MCP server at the same time.

In a terminal opened to the root of the odyssey-apollo-mcp folder, run the following command.

rover dev --supergraph-config ./supergraph.yaml --mcp
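If you'd rather launch the same command from a script, here is a small Python sketch. It uses only the flags shown above. It also checks for supergraph.yaml first, since the command expects to run from the project root. The helper names are our own, and starting the process requires the Rover CLI to be installed and on your PATH.

```python
import shlex
import subprocess
from pathlib import Path


def rover_dev_command(supergraph_config: str = "./supergraph.yaml", mcp: bool = True) -> list[str]:
    """Assemble the argv for rover dev, optionally adding the --mcp flag."""
    command = ["rover", "dev", "--supergraph-config", supergraph_config]
    if mcp:
        command.append("--mcp")
    return command


def start_rover_dev(project_root: str) -> subprocess.Popen:
    """Launch rover dev from the project root (requires Rover on PATH)."""
    if not (Path(project_root) / "supergraph.yaml").is_file():
        raise FileNotFoundError("supergraph.yaml not found; run from the odyssey-apollo-mcp root")
    return subprocess.Popen(rover_dev_command(), cwd=project_root)


if __name__ == "__main__":
    # Prints the exact command that start_rover_dev would run.
    print(shlex.join(rover_dev_command()))
```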

We'll see some output indicating that a session is starting up. We'll see a message that our supergraph is running, but then... oops! The process crashes!

Here's the error we'll see:

Error: You must define operations or enable introspection

And this error message is exactly right: we haven't actually defined any operations in our MCP server that can be represented as tools to present to our assistant. We'll define our first tool next.
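The condition behind that error can be modeled in a few lines. The Python sketch below is a hypothetical illustration inferred from the message itself, not Apollo MCP Server's source. It assumes operations live as .graphql files in a directory and that each file's name becomes a tool name. Only when no operations are found and introspection is disabled does it refuse to start.

```python
from pathlib import Path


class StartupError(RuntimeError):
    """Raised when the server has nothing to expose as tools."""


def load_operations(directory: str, introspection_enabled: bool = False) -> dict[str, str]:
    """Load hypothetical *.graphql operation files, keyed by file name (the tool name)."""
    operations = {
        path.stem: path.read_text(encoding="utf-8")
        for path in sorted(Path(directory).glob("*.graphql"))
    }
    if not operations and not introspection_enabled:
        # Mirrors the failure we just saw: no operations and no introspection.
        raise StartupError("You must define operations or enable introspection")
    return operations
```

Adding even one operation file is enough to get past the check. That is what we'll do next.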


Key takeaways

- The rover dev process launches a local router we can use to query all of the services in our API.

- To run properly, the local router requires some configuration in the form of a YAML file.

- This file defines not only which services should be incorporated as part of our comprehensive API, but also their schema locations and routing_urls.

- By passing the --mcp flag to the rover dev process, we can start up an MCP server at the same time as our API.

Up next

Our MCP server needs some operations to present as tools to AI assistants. Let's fix that up in the next lesson, and see what's happening under the hood.
